Contents

This page is in group Technology and is about a facet of multi-threaded concurrent programming where it’s an important matter that more or less independent sw-processes shall not make running difficult for the others.

Intro

During my previous blog notes, especially «Not so blocking after all» I stumbled upon a fact: concurrency may be used as an adjective: there’s more or less concurrency! Or concurrency is more or less limited! Or even complete!

I remember the Duracell Bunny from the seventies, how the only one in a crowd of other battery brand bunnies was able to drum long after the others had run out of power. But a recent animation gave me some new insight:

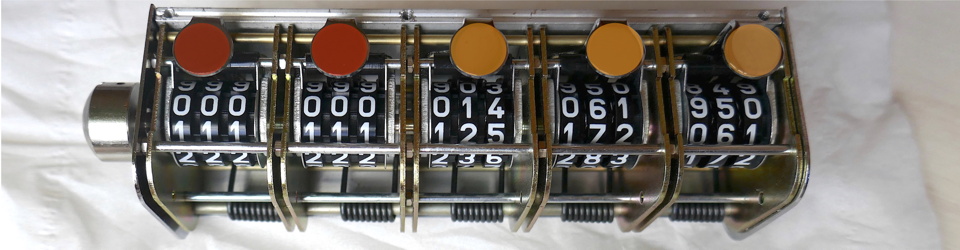

Figure 1: Duracell bunnies. Picture 1: Duracell; 2-6: from Bunny Power animation. See [7]

The bunnies start running alone in picture 2, then they meet and hold hands in 3; then they progress in 4-6. Is there a degree of independentness of the running here? Can they reach their common goals if they don’t agree on something, some part along the path? Of course not! So, is there a degree of concurrency?

The first time I discovered about this «degreeness» was in the Clojure concurrency documentation [1]:

commute. Must be called in a transaction. … Thus fun should be commutative, or, failing that, you must accept last-one-in-wins behavior. commute allows for more concurrency than ref-set. (here)

Then I asked about what the «metric of concurrency» was in a Clojure discussion group thread, referenced from here. Here’s from the answer by Leon Grapenthin:

How much happens at the same time in contrast to being queued up. Remember that only successful transactions affect the outside world. E. g.: Of two successful transactions, one had to be restarted because of the other having completed during its first try => The two successful transactions didn’t happen in parallel. Using commute reduces the potential of a retry and thus allows more successful transactions in parallel => more concurrency (here).

Then, for the n’th time I reread the Non-blocking algorithm article (Wiki-refs below) and found in Kogan and Petrank’s paper «Wait-free queues with multiple enqueuers and dequeuers» [2] that

Abstract: The queue data structure is fundamental and ubiquitous. Lock-free versions of the queue are well known. However, an important open question is whether practical wait-free queues exist. Until now, only versions with limited concurrency were proposed. In this paper we provide a design for a practical wait-free queue. Our construction is based on the highly efficient lock-free queue of Michael and Scott. To achieve wait-freedom, we employ a priority-based helping scheme in which faster threads help the slower peers to complete their pending operations. We have implemented our scheme on multicore machines and present performance measurements comparing our implementation with that of Michael and Scott in several system configurations. (From the open Abstract at ACM)

(Aside: I have more on Clojure here) Also, in the paper that Kogan and Petrank build their solution on (by Micheal and Scott [3], also in 1993) concurrency was an adjective:

Lamport [.] presents a wait-free algorithm that restricts concurrency to a single enqueuer and a single dequeuer. … Valois [..] presents a list-based non-blocking algorithm that avoids the contention caused by the snapshots of Prakash et al.’s algorithm and allows more concurrency by keeping a dummy node at the head.. …The algorithm employs separate Head and Tail locks, to allow complete concurrency between enqueues and dequeues.

Of course, as a user of computer programs (it feels) from the start of time I have felt that response was more or less flowing. How many times have I cried out «why did this program have to wait for that one to complete before it could start»? However, some times it’s obvious that it would have to wait. But having spent so much of my professional career coding with threads or PROCs (here) I haven’t come across concurrency as an adjective. Is this because we have treated hard time with interrupts and then the rest is a piece of cake? Is it because I have been working with CSP-type processes and channels? But then, it wasn’t piece of cake: critical sections couldn’t be of any length; buffering from interrupt into the processes had to be in control. Maybe I just haven’t realised the literal adjective nature of concurrency, even if virtually all my technical blogs circle around the theme)

So, is degree of concurrency a trait of the weather or the climate? Is it possible to catch its meaning?

CSP-type channels and more or less concurrency

No degree of concurrency with occam?

Roger Shepherd (who wrote the microcode for the transputer, an occam machine, in the early 1980’ies, see Wiki-ref below) showed in an earlier blog note that it was possible run occam without use of atomics [4]. The HW had enough atomicity (huh, here I use atomic as an adjective!) to support what was required by the occam memory model. Occam did not have buffered channels, but it was easy with occam to implement a buffer process and place it between channel ends. And the language had many-to-one channels. As I have suggested in Not so blocking after all synchronous channels are as non-blocking as anything, with the right connotation of the blocking term. And with parallel slackness [5] together with the specification of the system like «the input handler shall always take inputs, at a max rate of 44.100 kHz» – and an implementation with CSP channels and processes that «implements specification» (to use CSPm phrasing), then we didn’t need to think about lock-free or wait-free-ness. We didn’t need to think about how concurrent the architecture was. The metric was to fulfill the specification, not to think about the colour of concurrency.

But – is this a delusion? Some would even say that occam was not a real-time language? If so, it’s in the same group as C or C++, which are used as real-time languages.

As an aside, see what’s in the Wikipedia article about Erlang. This would basically also cover CSP:

Though all concurrency is explicit in Erlang, processes communicate using message passing instead of shared variables, which removes the need for explicit locks (a locking scheme is still used internally by the VM[.])

JCSP™

JCSP is a Java library for Communicating Sequential Processes developed by University of Kent at Canterbury [6]. Version 1.0 was released in 1999 after some years of development. Last update is 1.1-rc4 and the front page is updated this year (2014). (Aside: I think the work was kicked off during a SIG at WuTUG-19 in 1996, where I did my share and tried to code the commstime program using the Java synchronized mechanism, which was a mess, here)

Does it relate to the degree of concurrency? Let me start by pasting some from the description. I delve into the channel that’s most like Go’s channel:

Any2AnyChannel is an interface for a channel which is safe for use by many reading and writing processes. Reading processes compete with each other to use the channel. Writing processes compete with each other to use the channel. Only one reader and one writer will actually be using the channel at any one time. This is managed by the channel – user processes just read from or write to it. … All reading processes and writing processes commit to the channel (i.e. may not back off). This means that the reading processes may not ALT on this channel.

But doesn’t this indicate that the paradigm isn’t concerned about degree of concurrency? When a process commits on a channel it means it’s ready to output. Stronger: it needs to output. Time can in fact stop for it: in a message sequence diagram of this type the arrows are non-sloping (see here). No matter how much the primitives below are atomic, blocking, lock-free or wait-free doesn’t make any difference. If a sender or receiver was first on the channel it will yield anyhow. It it’s second data is moved across (or a pointer or an access privilege). Isn’t this problem orthogonal to degree of concurrency, in the context of non-blocking or not?

However, in JCSP channels may be zero-buffered, fixed size blocking FIFO, fixed size overwrite-oldest-when-full, fixed size discard-when-full or infinite sized FIFO. Channels may be either one-to-one, one-to-any, any-to-one or any-to-any. Channel types – both the receiving side and the sending side may be part of an ALT. Exceptions exist, as we just saw. And channels may be bundled in arrays. But doesn’t this flora of channels toolkit show that the designers have been thinking about concurrency as an adjective? The toolkit is larger than occam’s. But would it mean that JCSP is more concurrent than occam? I wouldn’t think so because it’s not about the tools since probably any JCSP-built architecture could also be built with occam. Basically, Turing lives.

Now, how is the CSProcess built? This is rather important:

In this JCSP binding of the CSP model into Java, a process is an instance of a class implementing this CSProcess interface. Its actions are defined by the run method.

It’s straight on top of Java’s concurrency model. So, if JCSP might not relate to degree of concurrency – will a clean Java system have to relate to it? Or am I mixing matters up now, comparing apples and bananas? As I said above any application built with any tool will have to relate to its specification of reactiveness. I struggle: is it the Lego bricks or the structure I am discussing?

Architectural level

I assume that more or less concurrency makes sense. I will try to make some lists. I am certain that it would start a discussion, but I am not certain whether or when I am right. Again, much is referred to Not so blocking after all. Disclaimer: these lists are heavily influenced by the straw through which I see computer science. But it’s a way to try to expand that straw.

Has degree of concurrency

The higher degree of concurrency the better:

- A single-threaded application where one work task blocks progression of other tasks has less concurrency than one the never blocks (also related to how long)

- A multi-threaded application has more concurrency the more the number of thread relates to number of work tasks

- An implementation of a CSP systems does not need to do any busy-polling to build the primitives. So it is more concurrent than one system that uses busy polling

- Java has notifyAll which means that a Java thread may be notified just in case: there may not be anything important to be notified about. The system wouldn’t know. So it is less concurrent than one system that hits all the time

- SDL and some other message driven systems are not allowed to stop an event when the task is not really able to treat it, so it has to be stored with a save command. So it is less concurrent than systems where messages may be filtered in a different way

- occam and Go may stop listening on channels to keep disturbances out. So they are more concurrent than systems that can’t

- Erlang and core.async has ways to filter even on contents. So they are more concurrent than systems that can’t

- A global critical region to implement something is less concurrent than if only parts of the system was necessary to protect

- Not needing a critical region is more concurrent than the opposite

- A non-blocking type atomic variable where one is not 100% sure that an unprotected change was correct at first trial is less concurrent than one where one would be certain

- A system that has much caching will be less concurrent than one where the same problem is solved without caching (I am not talking about speed, only the degree to which one disturbs when it would be better not to disturb)

- A system that has stop-the-world garbage collectors (as in Go) is less concurrent than (..one that doesn’t use that kind of garbage collection?)

- A system that needs to tune its garbage collection (like Java) to get performance is less concurrent than one that (…) doesn’t use dynamic memory

- A system that doesn’t create garbage is more concurrent than one that does

- A serial line interrupt that delivers a character per interrupt is more concurrent than one that frames and delivers a full message. (Less time per interrupt and receiver has time to pick up each byte vs. more time spent in interrupt because scheduling of receiver (driver, process, thread, task) is rather late, so needs a framed message)

- Rewrite of some of the quotes above

- Clojure concurrent programming commute allows for more concurrency than ref-set. Using commute reduces the potential of a retry and thus allows more successful transactions in parallel => more concurrency

- Wait-free queues give more concurrency than lock-free queues

- A system without explicit locks at application level but requiring locks at lower level is more concurrent than one that has locks at application level (only) (Erlang quote)

- A non-blocking algorithm that avoids the contention caused by the snapshots of Prakash et al.’s algorithm allows more concurrency (Lamport, Valois)

Is it possible to make a diagram of floating circles (like http://arborjs.org) for these aspects?

Degree of concurrency is no issue

And here’s a list of what I think is orthogonal to degree of concurrency, where «more» or «less» concurrency is not interesting, no issue. I am also leaving parallel programs (=multi-core) out of this table. For most of the below the concept take no degreeness, but the implementation may. Also, the application may have degreeness. I assume that the below are implemented as concurrent systems:

- CSP with channels that are non-buffered (synchronous) or buffered (asynchronous until full) (the process algebra concept)

- Formally verified also with respect functional, but also temporal requirements (some blog here)

- Systems with deterministic guarantees with regard to response times, like rate-monotonic scheduling (hard real-time systems)

- State transitions systems (includes SDL and CSP)

- Rewrite of a quote from above

- The algorithm employs separate Head and Tail locks, to allow complete concurrency between enqueues and dequeues (Lamport, Valois) (Complete but with two locks!)

- …

Stay tuned. Or rather: help!!

Degree of concurrency is void

These properties must be removed by design, and have nothing at all to do with degree of concurrency since it in all cases requires functional systems. If any of them should be encountered it’s often fatal:

- The property of being able to not fulfill the specification

- The property of being able to deadlock

- The property of being able to livelock

- The property of being able to not meeting a deadline

Reactiveness and degrees of concurrency

I think I can conclude from the above that these are not terms covering the same facts of such systems. But then, they are not completely unequal either.

I think reactive systems mostly are built as systems where degree of concurrency is no issue (list two above).

A CPA 2015 fringe presentation based on this blog note

See Two CPA 2015 fringe presentations

XMOS and xCore

Update 12Nov2021. I just discovered that this note was written before I had had any deep encounter with XMOS. There would be so much to say about this here. But I have some here: My XMOS pages.

IEC 61508

Update 12Nov2021. Aside: plus IEC 61508 had not been mentioned. Had I written this again I would have mentioned it. It’s relevant.

References

Wiki-refs: Duracell Bunny, Erlang, Non-blocking algorithm, occam, rate-monotonic scheduling, state transition systems, transputer

- Clojure – Concurrent programming, see http://clojure.org/concurrent_programming

- Wait-free queues with multiple enqueuers and dequeuers by Kogan, Alex; Petrank, Erez. Proceedings of the 16th ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming (PPOPP 2011). San Antonio, TX: ACM Press. pp. 223–234. ISBN 978-1-4503-0119-0. Buy from ACM. But I found it at http://www.cs.technion.ac.il/~erez/Papers/wfquque-ppopp.pdf

- Simple, fast, and practical non-blocking and blocking concurrent queue algorithms by Maged M. Michael and Michael L. Scott. Proceeding PODC ’96 Proceedings of the fifteenth annual ACM symposium on Principles of distributed computing. Read at http://www.cs.rochester.edu/~scott/papers/1996_PODC_queues.pdf

- Atomic operations and the transputer, © Roger Shepherd, 2014, in «Atomic for all?» at https://www.teigfam.net/oyvind/home/technology/090-atomic-for-all/#atomic_operations_and_the_transputer

- Parallel slackness, see http://wotug.org/parallel/acronyms/hpccgloss/P.html#parallel%20slackness and http://ww2.cs.mu.oz.au/~aaron/subjects/comp90025_2011_sm2/lectures/node195.html

- Communicating Sequential Processes for Java™ (JCSP), see http://www.cs.kent.ac.uk/projects/ofa/jcsp/

- Duracell Bunny (duracell rabbit, duracellkanin)

2-6: Pictures from an animation at www.fxguide.com/featured/bunny_power/ called ‘Bunny Fusion’, a Duracell Ultra spot, made by visual effects (VFX) company DIGITAL DISTRICT™ at www.digital-district.fr/en/home in Paris, directed by Pleix via Ogilvy. Thanks to DIGITAL DISTRICT for allowing me to use my bluntly made figure 1!