Contents

- 1 Limited selfrefs

- 2 Intro

- 3 Not the first to be included

- 4 IncludeOS is unikernel

- 5 IncludeOS is single-tasking

- 6 IncludeOS is resource efficient

- 7 IncludeOS is designed for cloud services

- 8 IncludeOS is designed for virtualized environments

- 9 Concurrency with MirageOS

- 10 Final remarks

- 11 Appendix: Excerpt from a mail conversation with the designer

- 12 References

New: 5Oct2016, updated 11Oct2016 (Stack Overflow)

This page is in group Technology. In it I try to study IncludeOS with respect to multi-threadedness, or even concurrency.

Limited selfrefs


Intro

Update (5Oct2016): I have had a long mail conversation with the IncludeOS architect Alfred Bratterud. I have been allowed to quote him. See Appendix: Excerpt from a mail conversation with the designer.

Not the first to be included

This note was triggered by an article in a Norwegian paper [1] about a new (Norwegian, cool) operating system called IncludeOS. What surprised me when I started to read about it was that it intriguingly takes its name from the way that the embedded world has always done it. To use IncludeOS just put something like #include <os> in your source file and the linker takes care of the rest. The operating system is also properly called a library OS in the documentation (more below).

I personally first saw this in the eighties, when we used the Intel PL/M language plus a purchased run-time system for the MCS-85, Texas Instruments Microprocessor Pascal (MPP Pascal) with a built-in scheduler for the TMS-9995 and TMS-99105, and Modula-2 with a purchased, plugged-in real-time kernel for the MCS-85. I also wrote a real-time executive for 8051-type single-chip microcomputers in the early eighties. Of course, the linkers only linked what was used.

Some years later the transputer processor arrived. It made it possible to not even include the OS: it was already there in the processor’s silicon, microcode and instruction set. The occam programming language ran directly on transputers, whose architecture was designed to be an occam machine. At the moment I work with the XC language running on XMOS processors, in the same way as with the transputer. Here not even #include is needed. The OS is simply built into the hardware.

Even after reading up on IncludeOS [2], [4] and [5], I need to discuss it further. The name is only the start. It’s interesting to learn about a solution that, according to the main scripture (IncludeOS: A minimal, resource efficient unikernel for cloud services [3]), is so great, but where many of the answers run quite contrary to many of my own blog notes.

IncludeOS is unikernel

From Wikipedia I read about unikernels:

Unikernels are specialised, single address space machine images constructed by using library operating systems. A developer selects, from a modular stack, the minimal set of libraries which correspond to the OS constructs required for their application to run. These libraries are then compiled with the application and configuration code to build sealed, fixed-purpose images (unikernels) which run directly on a hypervisor or hardware without an intervening OS such as Linux or Windows. (27Sept2016)

This is fine. The article lists our OS like this:

IncludeOS is a minimal, service oriented, open source, includable library operating system for cloud services. Currently a research project for running C++ code on virtual hardware. (27Sept2016)

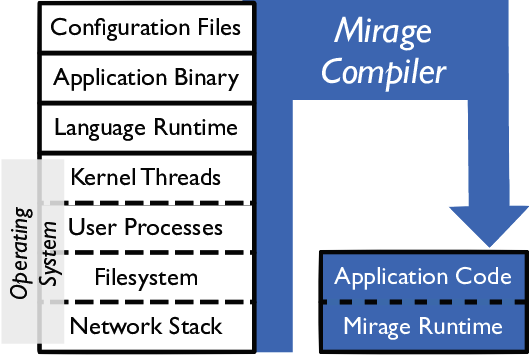

There is nothing about tasking or threading on the same page, except for the figure (embedded above). Still, [3] connects the unikernel and single-tasking terms, perhaps for some reason I don’t comprehend. In my head, «Application code» should have some threading.

IncludeOS is single-tasking

IncludeOS is single-tasking. However, almost any system needs to do several things simultaneously or concurrently, even here. Stay tuned. The executable user functionality code is run by a callback function, one per action. Probably all code consists of callback functions, drivers or components of some sort. Personally I am used to scheduling light processes, not callbacks from an event loop, with no need for any busy-poll or added microamps anywhere in the run-time. And scheduling is just a parameter-less call, plus a single line of code to set the process context, so the overhead is very low.
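To make the contrast concrete, here is a minimal sketch in Go, whose goroutines and channels are CSP-based. It is not IncludeOS code (IncludeOS services are written in C++), and EventLoop, CountWithCallbacks, counter and CountWithProcess are hypothetical names. The same tiny service, counting incoming events, is written once as a callback driven by an event loop and once as a process that blocks on its input channel:

```go
package main

import "fmt"

// Event is a hypothetical input event, e.g. an arriving request.
type Event struct{ ID int }

// EventLoop is a toy single-threaded event loop: callbacks are registered
// up front, and Run dispatches every queued event to each of them in turn.
type EventLoop struct {
	queue    []Event
	handlers []func(Event)
}

func (l *EventLoop) OnEvent(h func(Event)) { l.handlers = append(l.handlers, h) }
func (l *EventLoop) Post(e Event)          { l.queue = append(l.queue, e) }

func (l *EventLoop) Run() {
	for len(l.queue) > 0 {
		e := l.queue[0]
		l.queue = l.queue[1:]
		for _, h := range l.handlers {
			h(e)
		}
	}
}

// CountWithCallbacks: the callback runs once per event and returns, so any
// state that must survive between events lives outside it (here: count).
func CountWithCallbacks(ids []int) int {
	loop := &EventLoop{}
	count := 0
	loop.OnEvent(func(Event) { count++ })
	for _, id := range ids {
		loop.Post(Event{ID: id})
	}
	loop.Run()
	return count
}

// counter is the same service as a sequential process: it blocks on its
// input channel and keeps its state in an ordinary local variable.
func counter(in <-chan Event, result chan<- int) {
	count := 0
	for range in {
		count++
	}
	result <- count
}

func CountWithProcess(ids []int) int {
	in := make(chan Event)
	result := make(chan int)
	go counter(in, result)
	for _, id := range ids {
		in <- Event{ID: id}
	}
	close(in) // no more events: lets counter leave its loop and reply
	return <-result
}

func main() {
	ids := []int{1, 2, 3}
	fmt.Println(CountWithCallbacks(ids), CountWithProcess(ids)) // prints "3 3"
}
```

In the callback version the event loop owns the control flow, so state that must survive between events has to be parked outside the callback. In the process version the control flow belongs to the service itself, and the runtime simply deschedules it while it waits for the next event.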

(The following paragraph quotes [3] extensively.)

I have studied all places where thread and process are mentioned in [3], and it’s almost clear that IncludeOS doesn’t directly support either. I think this perhaps sums it up best: «With the proliferation of virtualization technologies the idea of single tasking operating systems have regained relevance, and there has been a surge of activity to find the best adaptation for the context of cloud computing.» However, this: «Other solutions are limited to partial virtualization relying on the host to provide abstractions, such as threads and drivers» may hint that IncludeOS does provide those abstractions. But this: «While existing network stacks were considered to be ported into IncludeOS, other implementations are usually tightly entangled with the operating systems to which they belong, relying heavily on local conceptions of drivers, threads, modules etc.» takes me back again. As would this: «While any general purpose operating system has to use hardware supported memory protection to ensure integrity among processes, a single-tasking operating system can chose (SIC) to disable these features.» Finally, where [3] talks about the «Qemu process, emulating the virtual hardware» and says that the «Qemu process has to do a bit more work to run an IncludeOS VM, as opposed to a Linux VM», I read it as IncludeOS, as a whole, running as one process, with the Qemu HW layer as the other side. It tells me that no, there is no threading on IncludeOS.

However, the line between single and threaded is fine, and I assume also between single and tasking. I fail to see why they use the word tasking, but as I read it, the line between tasking and threading seems even finer. This means that single-threaded or single-tasking isn’t as single-minded as one is misled to think. (Disclaimer: provided their use of the word «single» should not be connected to single address space.)

Why they seem to lock the programmer into an event-loop and callback methodology and still call it an OS, I fail to understand. The Clojure language runs on the Java virtual machine, the Common Language Runtime and JavaScript engines. There is a library called core.async that adds support for asynchronous programming using channels. The man behind these is Rich Hickey. He has made the best presentation I have seen (and I have seen many at CPA/WoTUG conferences!) that takes us from callbacks and event loops to proper processes with channels. In it he discusses the motivation, design and use of the Clojure core.async library [6].

IncludeOS is resource efficient

The authors state that an interrupt (a kind of HW threading from the sixties) does not, in the present implementation, do its work in the interrupt handler. Instead it sets a bit that is picked up by the main event loop, where the processor often sleeps (drawing almost no power) until it is woken by the interrupt. In my world, sleeping on microamps at this point is standard procedure.
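The deferred-interrupt pattern described above can be modelled in a few lines of Go. This is a hypothetical sketch, not IncludeOS’s C++ code: Machine, Interrupt and RunOnce are invented names, and the receive on the wake channel only stands in for the CPU sleeping until the next interrupt.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// Machine models a CPU with a few interrupt lines (hypothetical sketch).
type Machine struct {
	pending [8]atomic.Bool // one "pending" bit per interrupt line
	wake    chan struct{}  // receiving here stands in for the CPU sleeping
	handled []int          // interrupts serviced by the event loop, in order
}

func NewMachine() *Machine {
	return &Machine{wake: make(chan struct{}, 1)}
}

// Interrupt is the "handler". It does no real work: it only sets the
// pending bit and wakes the sleeping event loop.
func (m *Machine) Interrupt(irq int) {
	m.pending[irq].Store(true)
	select {
	case m.wake <- struct{}{}:
	default: // a wake-up is already pending; one is enough
	}
}

// RunOnce is one turn of the event loop: sleep (no polling) until woken,
// then do the actual work for every interrupt whose bit is set.
func (m *Machine) RunOnce() {
	<-m.wake
	for irq := 0; irq < len(m.pending); irq++ {
		if m.pending[irq].Swap(false) {
			m.handled = append(m.handled, irq)
		}
	}
}

func main() {
	m := NewMachine()
	m.Interrupt(3)
	m.Interrupt(5)
	m.RunOnce()
	fmt.Println(m.handled) // prints "[3 5]"
}
```

The sketch also makes one property of the set-a-bit design visible: two interrupts on the same line before the loop gets to run are coalesced into one handling.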

Another issue is the use, or not, of busy polling. Busy polling means that a SW concurrent process (or a callback function, for that matter) has to come back and check something. Consider SW that sends on a Linux pipe, or into a buffer that is allowed to get full. («Allowed to», as if «send and forget», which pretends that a buffer can’t get full, were a solution for any good SW. If you have a fast producer and a slow consumer, the buffers will eventually overflow. Should such a natural event then be pathological and restart the system? In a safety critical system governed by IEC 61508 the easiest answer to give an assessor is «no».) So, the pipe is full and the send call returns a bit telling that it is full. What does a system based on asynchronous sending do now? It has to poll to see when the pipe is ready to receive more data. This polling draws microamps or milliamp-hours, i.e. it is expensive.

Instead of polling, one could register a callback. But is there a general mechanism for whatever should then call that callback?
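A Go sketch of the difference between an asynchronous send that reports «full» and a blocking send. It assumes a small buffered channel as a stand-in for the pipe (Go channels are not Linux pipes), and TrySend, sendPolling, slowConsumer and Transfer are hypothetical names. TrySend plays the non-blocking send that returns a «full» indication, comparable to a write failing with EWOULDBLOCK; sendPolling is what the sender is then left with.

```go
package main

import (
	"fmt"
	"runtime"
	"time"
)

// TrySend models an asynchronous, non-blocking send: it never waits and
// only reports whether there was room (cf. a write returning EWOULDBLOCK).
func TrySend(ch chan<- int, v int) bool {
	select {
	case ch <- v:
		return true
	default:
		return false
	}
}

// sendPolling keeps retrying TrySend until it succeeds: a busy-poll.
// It returns how many attempts that took.
func sendPolling(ch chan<- int, v int) int {
	attempts := 1
	for !TrySend(ch, v) {
		attempts++
		runtime.Gosched() // yield, but come straight back and check again
	}
	return attempts
}

// slowConsumer reads n values, pausing before each to model a slow reader.
func slowConsumer(ch <-chan int, n int, done chan<- []int) {
	got := make([]int, 0, n)
	for i := 0; i < n; i++ {
		time.Sleep(time.Millisecond)
		got = append(got, <-ch)
	}
	done <- got
}

// Transfer pushes 0..n-1 from a fast producer to a slow consumer through a
// small buffer, either busy-polling or blocking when the buffer is full.
func Transfer(n int, poll bool) (received []int, attempts int) {
	ch := make(chan int, 2) // small buffer: the fast producer fills it quickly
	done := make(chan []int)
	go slowConsumer(ch, n, done)
	for v := 0; v < n; v++ {
		if poll {
			attempts += sendPolling(ch, v)
		} else {
			ch <- v // blocking send: producer is descheduled until there is room
			attempts++
		}
	}
	return <-done, attempts
}

func main() {
	_, blocking := Transfer(10, false)
	_, polling := Transfer(10, true)
	fmt.Println("send attempts, blocking:", blocking, "polling:", polling)
}
```

On a typical run the polling producer needs many more attempts than the ten sends the blocking producer makes, and each extra attempt costs processor time. In the blocking version the channel itself drives the scheduling: the producer is not run again until there is room.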

It’s also like Java’s notifyAll: several threads are notified and scheduled for no really good reason. Per Brinch Hansen criticised this heavily [7]. Something is wrong with Java’s solution. Besides, it draws more current than necessary.

It’s like touting that asynchronous systems are so great, and that a system must be asynchronous to be really responsive and make up a highly reactive system, a point that shines through in [3]. Wrong. Asynchronous and synchronous are orthogonal to responsiveness; both are necessary tools. What is pathological blocking in one world is, in another world, just necessary waiting for a resource that is not ready, without holding up any other functionality. That is, provided a process, function or callback function does what it should do, and nothing more. It’s not correct to let one function blink a LED ten times per second and at the same time let it start a service that might come back with its result usually within 50 ms, but sometimes as late as one second. But if you have poor support for threading/concurrency, then asynchronous is the solution, and «Blocking code is not good for the universe» (Herb Sutter [14]).

With CSP (Communicating Sequential Processes), processes with channels in between (now also the basis of the concurrency in Go/Golang), you’d naturally make one process for each service, or for completely sequential or orthogonal services, to keep cohesion high (enough). When we made a run-time system for this, my colleague started out with lots of busy-poll functions. I told him that in such a system we never, ever need busy-polling [8]. Never needing busy-polling in the scheduler means less power consumption. The channel drives the scheduling.
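A minimal CSP-style process in Go. Go’s select is close to, though not the same as, occam’s ALT (for instance, Go picks pseudo-randomly among ready cases). This is not the run-time system from [8], and accumulator and Sum are hypothetical names. The process owns its state, and while none of its channels is ready it is simply not scheduled, so nothing polls:

```go
package main

import "fmt"

// accumulator is one CSP-style process that owns its state. It waits in a
// select (Go's counterpart to occam's ALT) on three channels. While none of
// them is ready the goroutine is not scheduled at all: nothing polls.
func accumulator(add <-chan int, query <-chan chan int, quit <-chan struct{}) {
	total := 0
	for {
		select {
		case n := <-add:
			total += n
		case reply := <-query:
			reply <- total
		case <-quit:
			return
		}
	}
}

// Sum sends each value to the process over an unbuffered channel (each
// send is a rendezvous), then asks for the total and stops the process.
func Sum(values []int) int {
	add := make(chan int)
	query := make(chan chan int)
	quit := make(chan struct{})
	go accumulator(add, query, quit)
	for _, v := range values {
		add <- v
	}
	reply := make(chan int)
	query <- reply
	total := <-reply
	close(quit)
	return total
}

func main() {
	fmt.Println(Sum([]int{1, 2, 3, 4})) // prints 10
}
```

Because the process handles one message at a time, the query is only accepted after every earlier add has been applied, so the reply is deterministic without any locks.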

It’s the same with non-blocking variables, which rely on opportunistic thinking: take a copy, modify it, and then come back and see whether some other concurrent process has updated the original in the meantime. Most of the time it’s ok. If not, try again, or start all over again to see whether the other’s update was of any concern to you. This trying again is a kind of busy-poll.
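The optimistic copy-modify-retry idea is what a compare-and-swap (CAS) loop does. Here is a Go sketch using sync/atomic; addOptimistic and Hammer are hypothetical names, and in real Go code atomic.AddInt64 would do this in one call. The loop is spelled out to make the «try again» visible and countable:

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// addOptimistic adds delta to *x without a lock: take a copy, compute the
// new value, and install it only if nobody changed *x in the meantime.
// It returns how many times it had to try again.
func addOptimistic(x *int64, delta int64) (retries int) {
	for {
		old := atomic.LoadInt64(x) // take a copy
		updated := old + delta     // modify the copy
		if atomic.CompareAndSwapInt64(x, old, updated) {
			return retries // nobody interfered
		}
		retries++ // someone else got there first: go round again
	}
}

// Hammer lets each of the workers add 1 to a shared counter perWorker
// times, and returns the final value and the total number of retries.
func Hammer(workers, perWorker int) (final int64, retries int64) {
	var counter int64
	var totalRetries atomic.Int64
	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := 0; i < perWorker; i++ {
				totalRetries.Add(int64(addOptimistic(&counter, 1)))
			}
		}()
	}
	wg.Wait()
	return atomic.LoadInt64(&counter), totalRetries.Load()
}

func main() {
	final, retries := Hammer(8, 10000)
	fmt.Println("final:", final, "retries:", retries) // final is always 80000; retries varies
}
```

The final value is always correct; the number of retries depends on contention and scheduling, and every retry is work that is thrown away.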

Why IncludeOS makes such a big deal of low power, when at the same time it advocates asynchronous programming and callback functions, seems somewhat strange to me. It’s not enough for me that it is better than the other OSes running on top of a virtualized environment. Please explain.

The IncludeOS paper [3] also talks about system calls. IncludeOS handles these through Red Hat’s newlib library. (I used its precursor, the Cygnus library, for years on my PC at work.) IncludeOS hasn’t described how potentially blocking system calls would be handled (EWOULDBLOCK exists). I think there would be polling to pick up such a call. According to Wikipedia, newlib is a «C Standard Library», which then should not contain Posix. But there is a lot of mention of Posix and threads in the newlib documentation [12]. As I read the IncludeOS documentation, it would be strange indeed if it contained threads.

Newlib also has reentrant system subroutines. Here’s how [3] summarises this:

No system call overhead; the OS and the service are the same binary, and system calls are simple function calls, without passing any memory protection barriers

It doesn’t say anything about blocking calls.

I assume that this is the opposite: the Go/Golang runtime system runs on top of some host OS, and in the case of blocking syscalls, this is what happens:

In the case of blocking syscalls, the Go scheduler will automatically dispatch a new OS thread (the time limit to consider a syscall «blocking» has been 20us), and since non-network IO is a series of blocking syscalls, it will almost always be assigned to a dedicated OS thread. Since Go already uses an M:N threading model, the user is usually unaware of the underlying scheduler choices, and can write the program the same as if the runtime used asynchronous IO. [11]
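The behaviour described in the quote can be observed with a small Go program. This is a Unix-only sketch (it uses the low-level syscall package so that the read really blocks in the kernel), and BlockedReaderDoesNotStall is a hypothetical name. With GOMAXPROCS set to 1, one goroutine blocks in read(2) on a pipe; in this sketch the main goroutine can still do its own work and then write the byte that releases the reader, because the runtime hands its single logical processor over to another OS thread while the reader sits in the kernel.

```go
package main

import (
	"fmt"
	"runtime"
	"syscall"
)

// BlockedReaderDoesNotStall runs with a single logical processor. One
// goroutine makes a plain, blocking read(2) on a pipe; the main goroutine
// still does its own work, and only then writes the byte the reader waits for.
func BlockedReaderDoesNotStall(work int) (sum int, got byte, err error) {
	prev := runtime.GOMAXPROCS(1)
	defer runtime.GOMAXPROCS(prev)

	fds := make([]int, 2)
	if err = syscall.Pipe(fds); err != nil {
		return 0, 0, err
	}
	defer syscall.Close(fds[0])
	defer syscall.Close(fds[1])

	done := make(chan byte, 1) // buffered so the reader never leaks
	go func() {
		buf := make([]byte, 1)
		var rerr error
		for {
			_, rerr = syscall.Read(fds[0], buf) // blocks inside the kernel
			if rerr != syscall.EINTR {
				break
			}
		}
		if rerr != nil {
			buf[0] = 0
		}
		done <- buf[0]
	}()
	runtime.Gosched() // give the reader the only processor so it enters read(2)

	for i := 1; i <= work; i++ { // ...yet this code still gets to run
		sum += i
	}
	if _, err = syscall.Write(fds[1], []byte{42}); err != nil {
		return sum, 0, err
	}
	return sum, <-done, nil
}

func main() {
	sum, got, err := BlockedReaderDoesNotStall(1000)
	fmt.Println(sum, got, err) // prints "500500 42 <nil>"
}
```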

The Go case may be out of scope here; I included it simply to show it. The same goes for these two papers:

In The Problem with Threads [9], Edward A. Lee argues that threading is too difficult, and in Threads Cannot be Implemented as a Library [10], Hans-J Boehm basically argues that C with PThreads is difficult. In the span between these two, where Go goroutines answer both sides’ arguments quite well, IncludeOS should come in and actually offer a «better» (in my opinion) process/threading model than callbacks and event loops.

Disclaimer: I have only read [3], which doesn’t go into much detail on this. For the sake of IncludeOS users I’d rather hope that I am wrong. If so, I will edit this note.

IncludeOS is designed for cloud services

Cloud services these days seem to run in virtualized environments. This means that Linux and Linux and Linux, and whatever other operating system, like IncludeOS, share the same machine. I do a variant of this at home, where I let macOS run Parallels, which runs Windows 10 (and later Chrome OS). The latter simply because I don’t want to fall too much behind with Microsoft in my mostly Mac world. There’s something called a hypervisor here. From Wikipedia:

A hypervisor or virtual machine monitor (VMM) is a piece of computer software, firmware or hardware that creates and runs virtual machines. A computer on which a hypervisor runs one or more virtual machines is called a host machine, and each virtual machine is called a guest machine. The hypervisor presents the guest operating systems with a virtual operating platform and manages the execution of the guest operating systems. Multiple instances of a variety of operating systems may share the virtualized hardware resources: for example, Linux, Windows, and OS X instances can all run on a single physical x86 machine. (30Dec2016)

I am not certain if the OS X (now macOS) statement above is correct. But I assume that IncludeOS could have been in that list.

IncludeOS is designed for virtualized environments

Why doesn’t the virtualizing layer define a minimal set of concurrency features? Or does it?

Concurrency with MirageOS

[3] mentions MirageOS. It says that «IncludeOS has many features in common with Mirage; it is single tasking, it draws only the required OS functionality from an OS library, and links these parts together with a service, to form a bootable virtual machine image, also called an appliance.» However, as is also mentioned in [3], MirageOS runs below the OCaml language, which indeed supports concurrency (threading, tasking). In [13] we read that:

MirageOS is a library operating system that constructs unikernels for secure, high-performance network applications across a variety of cloud computing and mobile platforms. Code can be developed on a normal OS such as Linux or MacOS X, and then compiled into a fully-standalone, specialised unikernel that runs under the Xen hypervisor.

Since Xen powers most public cloud computing infrastructure such as Amazon EC2 or Rackspace, this lets your servers run more cheaply, securely and with finer control than with a full software stack.

MirageOS uses the OCaml language, with libraries that provide networking, storage and concurrency support that work under Unix during development, but become operating system drivers when being compiled for production deployment. The framework is fully event-driven, with no support for preemptive threading.

So, even if MirageOS is single tasking, the application programmer has more real concurrency available than the callback functions of IncludeOS alone offer.

Final remarks

Even if JavaScript, too, is without real threading, somebody must tell me why an OS provides only callbacks, and why «that’s it» is enough. Language designers seem to think processes are difficult. In my experience (from embedded) they aren’t, provided I have good abstractions for them. Single-tasking/single-threading and asynchronous designs sell these days. Obviously I am not in the target audience.

But there is still hope

On the IncludeOS GitHub page https://github.com/hioa-cs/IncludeOS/wiki/FAQ#why-no-threads (also [16]) I read between the lines that they seem to think their threading model would have to be like Posix threads’ little brother: complex. But there is hope. They end by saying that «There are also a lot of interesting alternatives to threads that we’re currently looking into.» Great! How about a CSP variant? I only hope it’s not too late to add processes as more than an afterthought, for they should become first-class citizens.

Disclaimer: please advise me, either by a comment here or a mail to me. I’d like to hear from the IncludeOS designers and users (update, see below). My basis is a paper, which of course anyone may study. I’d be happy to rephrase!

Appendix: Excerpt from a mail conversation with the designer

(7Oct2016) I have had a long mail conversation with the IncludeOS architect Alfred Bratterud. I have been allowed to quote him, but I will make it short. You should go to their pages for news.

Bratterud gave me these papers, [AB1], [AB2], [AB3] and [AB4], and explained his context. What he basically said was that what I had read out of [3] was perhaps coloured by the fact that it was one of his earlier papers on IncludeOS. (So here I surely miss an IncludeOS white paper: what it is, and what they think about the future.) At least he did not now feel that the concept of callbacks and event loops was any panacea, not for IncludeOS either. But he did recognise that for certain applications, especially in virtualization, that methodology was often the fastest. I quoted him in the «But there is still hope» chapter above, and he confirmed that yes, some kind of threading or support for processes was coming. To me, [AB3] is particularly interesting in this context.

(10Oct2016) I now learn that the IncludeOS FAQ [16] has been updated, in the «Why no threads?» chapter, with «In particular we like the upcoming C++ coroutines, and how you can build Go-like channels on top of them.» – referring to [AB3]. I can’t wait to see in which direction IncludeOS is going!

I did listen to a good C++ talk at TDC2015, but I can’t recollect that there was anything there about coroutines. And I obviously don’t follow C++ closely enough. But [AB3] mentions them, so this was new to me!

(Aside: I added a reference about C++ coroutines, see [17]; plus the starting C++ document «Wording for Coroutines» [18] (taken from [AB3]). There they add the keywords co_await, co_yield, and co_return plus the test macro __cpp_coroutines. The coroutine is built on top of generator, future and promise with yield. A document discussing this is [19], with the great subtitle «a negative overhead abstraction». My comment, after studying some of this is that you’d have to be a C++ high priest to think it’s simple. I know from my occam years how its designer succeeded with the opposite.)

We used Modula 2 with coroutines at work in 1988 and shipped one of our best products with it (the Autronica BS-100 fire panel), and we used occam (compared to the above it’s bare-bones and so simple) with channels on the occam machines called transputers by Inmos in the early nineties (shipped on the Autronica NK-100 engine condition monitoring system «MIP calculator»). Development of ideas isn’t linear in any way. Sometimes new generations have to discover things themselves. The original wrapping was perhaps too confined. And when they do, it seems to be in some disguise, but sometimes revealing a more practical stance to the problem. I look forward to this!

- [AB1] Diagnosing Virtualization Overhead for Multi-threaded Computation on Multicore Platforms by Xiaoning Ding and Jianchen Shan. Published in Cloud Computing Technology and Science (CloudCom), 2015 IEEE 7th International Conference on, see http://ieeexplore.ieee.org/document/7396161/

- [AB2] APLE: Addressing Lock Holder Preemption Problem with High Efficiency by Jianchen Shan, Xiaoning Ding and Narain Gehani. In IEEE CloudCom, see https://www.computer.org/csdl/proceedings/cloudcom/2015/9560/00/9560a242.pdf

- [AB3] Channels – An Alternative to Callbacks and Futures by John Bandela at CppCon 2016, see https://github.com/CppCon/CppCon2016/blob/master/Presentations/Channels%20-%20An%20Alternative%20to%20Callbacks%20and%20Futures/Channels%20-%20An%20Alternative%20to%20Callbacks%20and%20Futures%20-%20John%20Bandela%20-%20CppCon%202016.pdf (points to [18] and [19])

- [AB4] Adopting Microservices at Netflix: Lessons for Architectural Design by Tony Mauro, see https://www.nginx.com/blog/microservices-at-netflix-architectural-best-practices/

References

Wiki-refs: C Standard Library, Clojure, Coroutine, Criticism of Java, DOM (Document Object Model), Hypervisor, Modula 2, Newlib, Non-blocking algorithm, occam, Unikernel

- [1] Nå er det norske operativsystemet IncludeOS klar til bruk («Now the Norwegian operating system IncludeOS is ready for use») by Harald Bromback @ digi.no, see http://www.digi.no/artikler/na-er-det-norske-operativsystemet-includeos-klar-til-bruk/358515 (in Norwegian)

- [2] IncludeOS, see http://www.includeos.org/

- [3] IncludeOS: A minimal, resource efficient unikernel for cloud services by Alfred Bratterud, Alf-Andre Walla, Hårek Haugerud, Paal E. Engelstad, Kyrre Begnum, Dept. of Computer Science, Oslo and Akershus University College of Applied Sciences, Oslo, Norway, see https://github.com/hioa-cs/IncludeOS/blob/master/doc/papers/IncludeOS_IEEE_CloudCom2015_PREPRINT.pdf. Presented at http://2015.cloudcom.org/ (2015)

- [4] IncludeOS: Run cloud applications with less by Serdar Yegulalp @ InfoWorld, see http://www.infoworld.com/article/3011086/cloud-computing/includeos-run-cloud-applications-with-less.html

- [5] #Include <os>: from bootloader to REST API with the new C++ by Alfred Bratterud, CEO, IncludeOS @ CppCon 2016, see https://cppcon2016.sched.org/event/7nLe?iframe=no

- [6] Clojure core.async, lecture (45 mins). Rich Hickey discusses the motivation, design and use of the Clojure core.async library. See http://www.infoq.com/presentations/clojure-core-async. Additionally you can download the soundtrack from this as an mp3. (There is also some about this in my notes; search for Hickey.)

- [7] Java’s Insecure Parallelism by Per Brinch Hansen (1999), see http://brinch-hansen.net/papers/1999b.pdf. Since I am on the occam discussion group, I was lucky to read this letter before he published it. He initially sent it there.

- [8] New ALT for Application Timers and Synchronisation Point Scheduling, Øyvind Teig (myself) and Per Johan Vannebo, Communicating Process Architectures 2009 (WoTUG-32), IOS Press, 2009 (135-144), ISBN 978-1-60750-065-0, see http://www.teigfam.net/oyvind/pub/pub_details.html#NewALT

- [9] The Problem with Threads by Edward A. Lee. Electrical Engineering and Computer Sciences, University of California at Berkeley, Technical Report No. UCB/EECS-2006-1, January 10, 2006, IEEE Computer 39(5):33-42, May 2006, see http://www.eecs.berkeley.edu/Pubs/TechRpts/2006/EECS-2006-1.html. I have discussed this in a separate blog note.

- [10] Threads Cannot be Implemented as a Library by Hans-J Boehm. HPL-2004-209, see http://www.hpl.hp.com/techreports/2004/HPL-2004-209.html

- [11] golang: how to handle blocking tasks optimally? (Stack Overflow), see http://stackoverflow.com/questions/32452610/golang-how-to-handle-blocking-tasks-optimally

- [12] The Red Hat newlib C Library (System Calls), see https://www.sourceware.org/newlib/libc.html#Syscalls

- [13] MirageOS, see https://mirage.io/

- [14] Pike & Sutter: Concurrency vs. concurrency (one of my blog notes), see https://www.teigfam.net/oyvind/home/technology/072-pike-sutter-concurrency-vs-concurrency/

- [15] CSP on Node.js and ClojureScript by JavaScript (another of my blog notes)

- [16] Why no threads? by IncludeOS, see https://github.com/hioa-cs/IncludeOS/wiki/FAQ#why-no-threads

- [17] Windows with C++ – Coroutines in Visual C++ 2015 by Kenny Kerr. In MSDN Magazine, October 2015, see https://msdn.microsoft.com/en-us/magazine/mt573711.aspx

- [18] Wording for Coroutines (C++ document P0057R5) by Gor Nishanov, Jens Maurer, Richard Smith and Daveed Vandevoorde, see http://www.open-std.org/jtc1/sc22/wg21/docs/papers/2016/p0057r5.pdf (What is the «suspension context of a function (5.3.8)»? The obvious answer at Stack Overflow: it’s defined in the paper!)

- [19] C++ Coroutines, a negative overhead abstraction by Gor Nishanov at CppCon 2015, see https://github.com/CppCon/CppCon2015/blob/master/Presentations/C%2B%2B%20Coroutines/C%2B%2B%20Coroutines%20-%20Gor%20Nishanov%20-%20CppCon%202015.pdf