1Sep2013 – updated 19Feb2015

This page is in group Technology.

| This is a small contribution for software programmers who do concurrent programming. It is a naïve follow-up from a previous blog note: Pike & Sutter: Concurrency vs. Concurrency, meant to install a gut feeling of when blocking is not evil. Looking back I have discovered some milestones in my own career. |

1978

When I first started writing concurrent software for embedded microcontrollers it was on an Intel Prompt 48. My boss would not allow me to buy the Intel Intellec «blue box» with a proper compiler. So I wrote all in assembly language for the MCS-48 processor. I had 1024 bytes of memory for the program, and I had to do paging for every 256 bytes. I had to calculate all addresses by hand. Inserting new code had me relocate a lot.

We used this in an Uninterruptible Power Supply (UPS), used to assure continuous power for relay stations on mountain tops in Norway. The unit read 230V AC power voltage «from the valley». After power loss or bad frequency a diesel engine was started after an initial delay while the battery delivered power; with max three start retrials. If it started ok then the generator delivered alternative power that was rectified to charge the battery bank. All voltage levels were read with successive approximation conversion (it just takes an op amp comparator and some pins on the processor) – as we didn’t have any A/D-converter. All comparisons of voltages had hysteresis. All compare levels as well as delay times could be modified with potentiometers, with a measuring output per potmeter for a handheld meter. 2.55 Volt on the potmeter was 255 AC Volt, 3.0 Volt on another potmeter was 30 seconds, and on another 30 minutes. This, in addition to status LED outputs, was the man machine interface. The unit controlled some relays and contactors.

We delivered quite a few of these units. They were in use for at least 10 years before they were modified, and some more years before they (probably) were obsoleted.

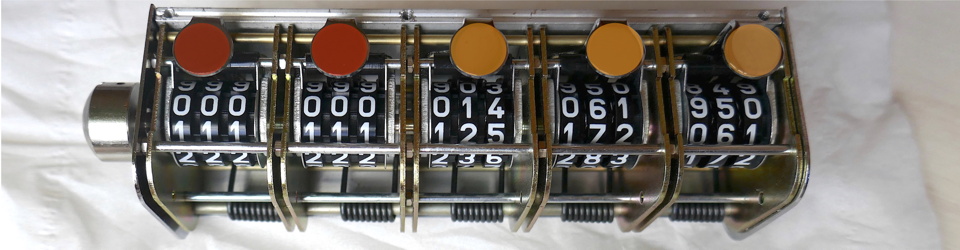

All this was controlled from two programs inside the 1024 bytes of code space. After the power-up main to initialise, then on every timer tick (I think once per 100 ms) the interrupt ran. There were state and counters for the functions, each had to fit in 8 bits. At every state of the timer interrupt something was done. But anything that needed to wait for something was not allowed to loop around.

There was no blocking. If we had had blocking, we could not have got the job done. The single sw interrupt «process» had all: no composition into several «mains». Just the one. Just a single block – not much to play with.

1988

After this we used the following programming systems for the larger processor systems (like up to or even above 64 KB), we used: (1) Intel PL/M language plus purchased run-time system for MCS-85, (2) Texas Instruments Microprocessor Pascal (MPP Pascal) with built-in scheduler for TMS-9995 and TMS-99105 and (3) Modula-2 with purchased plugged-in real-time kernel for MCS-85.

The smaller parts of the products lived a parallel life, based on MCS-48 and MCS-51 processors. I mostly worked with them. Some used the timer interrupt driven system (plus serial line handling) described in the 1978 chapter above. One used a scheduler I wrote in assembly language for MCS-48.

Based on this I wrote a scheduler in PL/M-51 for the MCS-51 processor. I still have the description, see [02]. Looking at it now I see how the scarce resources drove the design. It basically was a system with four small «processes» that communicated via a mailbox for each process. There were timeouts, several interrupts and in fact timed polling. It was far from CSP with its polling for internal events (not necessary for a CSP implementation), but the mailbox was channel like (for CSP, also start at the previous blog note [01]). The mailbox had one sender and and receiver, and it was blocking. The sender did not fill the mailbox before it had been emptied by the receiver. There also was protection from context switches by the system timer or interrupt if the stack pointer was one or more above process level. So just calling a subroutine prohibited context switch and thus kept registers safe. So, no semaphors.

This simple system used rudimentary composition: four software processes plus interrupts. It also used blocking communication and synchronization. No new data was produced before the old had been read by the consumer, also rather rudimentary – this time to avoid overflow.

Even if they were in an obsoleted product, a huge amount of these have not been taken out of use – still controlling loops of fire detectors – from hotels to offshore, cruise ships to nuclear stations. The sw was sent to Japan to be mask programmed two times, the last version in 1990. It was produced for some 15-20 years.

1993

At this time we had started looking at transputers. I learnt that they were programmed in a new language called occam 2. In 1989 I went to a week long course arranged by Inmos in Bristol in the UK. These microprocessors had four high speed links and were «occam machines» – or even more precisely: «subset-of-CSP machines». I had worked with the Texas TMS 9995 and TMS 99105 processors with their register banks and CRU serial lines used for any type of interconnect, with this MPP Pascal (mentioned above) designed from ideas by Per Brinch Hansen. MPP Pascal «knew» what a process was. So did the transputers, even the hardware. The transputer designers said that this Texas architecture was the one that had influenced them the most, even if I have not heard this from David May himself. This was at the time all new to me. At that course I was introduced to the fully folded TDS development system that could program and download communicating parallel programs on any number of transputers. The debugger could move «down a channel», even if the channel end was on another processor. I was changed for life.

A process was cheap: fast context switches – less than a microsecond. In the examples, sorting was a parallel sieve; a buffer pool was a series of chained processes; an overflow buffer was explicitly programmed to have control of losing data – just add a feedback buffer element in front of a pipeline of buffer element processes.

When I came back to work again we were allowed to start working with transputers. We started with T222 16 bits controller and went up to the T805 32 bits machine with floating point. They could run the same source code. We used them in several products over almost ten years, like a distributed real-time system to measure data from diesel engines on board ships, and calculate such parameters as delivered power (in MegaWatt!).

In this world I learned to use lots of software processes, to have parallel slackness. I designed the systems with process / data flow diagrams, I used lots of composition. I learnt to add buffering where needed and to appreciate the fact that blocking, synchronization and communication took place for every completed data transfer. I learnt that blocking a process still keeps the processor running, doing other things, as long as there is more to do. (Update: see Not so blocking after all). And I learnt that if some of the connected processes had a communication cycle, it’s called deadlock. It can stop a happy year long whirl and gradually freeze the whole lake. But I also learnt how to break such cycles by design.

These occam processes were the true block play for blockers, who flocked to the transputer conferences at that time.

Now

If you have a multiprocess system (not really multithread, which implies common sharing inside a process ) that is «multi» (several or more processes), then blocking is more than just OK. It is a solid block play tool that may help you build structures that won’t fall. Blocking is not bad or evil. It’s good. Provided you do parallel decomposition (yes, that’s what I have been talking about) with processses, as in Process Oriented Programming. And this also goes for communicating state machines. If you only have non-runnable objects, as in Object Oriented Programming, it’s ok to think blocking is evil – and that singlethreading is the path (nginx and Node.js are built on this thinking).

But as for multiprocess you need a good process model. A language or a library or a scehduler. But I have a feeling that an operating system process might be rather expensive. I have written so much about this (papers and blog notes, start in the menu above) that I will only give a tip or two here.

Search the internet for CSP or chan or channel and combine that with your favourite language. See what you find. Also, check out Go, and perhaps even occam. A multithreaded library may even be an ok starting point, not contrary to what I said above.

| Lastly, my XCHAN idea tries to combine asynchronous and synchronous, non-blocking and blocking communication in a safe way [03]. |

If you have questions or comments, please go ahead. If you want to contact me personally, I am here.

References :-:

This time all the references are to my own work…

- Pike & Sutter: Concurrency vs. Concurrency (a previous blog note)

- «Real-time executive for 8051-type single-chip microcomputers«, see http://www.teigfam.net/oyvind/pub/pub_abstracts.html#RTX-51

- «XCHANs: Notes on a New Channel Type«, see http://www.teigfam.net/oyvind/pub/pub_details.html#XCHAN