This text is a comment to Øyvind Teig’s blog post at http://oyvteig.blogspot.com/2009/02/007-synchronous-and-asynchronous.html

Unmodified comment (however a larger version of the drawing has been used) by Ron Hackler, received as email, Dec.30th, 2009:

IEEE/Teig Notes

The following is an attempt to clarify what appears to be the main points of your blog]. Towards this end, the following papers are recommended:

[CSER] Universal Systems Language for Preventative Systems Engineering, and

[INCOSE] A Formal Universal Systems Semantics for SysML

They can be downloaded from the Articles section at our website:

USL is a systems language independent of a particular implementation. With USL a functional architecture is defined with Type Maps (TMaps) and Function Maps (FMaps) to specify functionally what the system does. This means a user does not have to define functions used to implement communication between processes (e.g., synchronous and asynchronous send and receive functions); that is, communication is fully transparent to the user.

The same USL Functional Architecture can be mapped onto alternative implementations: one may exhibit sequential and the other parallel behavior. In Concurrent Communicating Processes (CSP), the purpose of interleaving two processes with P1 ||| P2 is to model concurrent processes that do not communicate. As noted in [CSER], the USL Include structure supports the possibility of concurrent operational behavior, but does not require it. This depends on how it is resource allocated. For example, the Include primitive control structure of an FMap simply denotes independence between the children functions; both, in that they do not communicate and that they are independently controlled by their common parent. In an implementation, the Include structure could be implemented with sequential behavior (using one resource to do two independent functions, one at a time, or two resources, each performing one of the two independent functions) or with parallel behavior (on two concurrent resources, each performing one of the two independent functions).

Note, that for any of the process algebras (CSP, CCS or LOTOS) "parallelism" is a misnomer (see Receptive Process Theory); none of the process algebras support "true" concurrency, only interleaving, see receptive process theory paper:

A Comparative Introduction to CSP, CCS and

LOTOS,

Colin Fidge, Software Verification Research

Centre Department of Computer Science, The University of Queensland, Queensland

4072, Australia, January 1994

With USL, the Functional Architecture is defined as a resource transparent specification, that is, it abstracts the concepts of "parallelism" and "concurrency" to that of "independence". Parallelism and concurrency only occur when a Functional Architecture has been allocated to a Resource Architecture that provides for those behaviors. For example, parallelism exists in a USL System Architecture when an FMap family (a parent and its children) in a Functional Architecture that is defined with an Include structure is allocated to an FMap family in a Resource Architecture that is also defined with an Include structure.

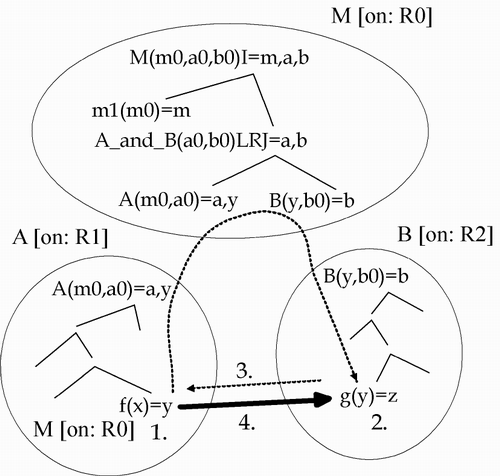

The model for mapping a functional architecture onto a resource architecture can be conceptualized as a process of drawing circles around portions of the Functional Architecture (e.g., around different parts of the FMaps), see Figure 1 in following insert. The syntax in this figure is left to right, function(input)=output. An intermediate function which is at the boundary of two circles, one above and one below, is split into two functions in the implementation/resource architecture: one at the leaf node as a function in the upper resource circle and the other as a top node function in the lower resource circle. The inputs to the leaf function in the upper resource are shipped all together (with a synchronizing mechanism) or separately, asynchronously, across some communication channel (e.g., TCP/IP) to the receiving resource in the lower circle. The sending resource asynchronously continues doing other things until the receiving resource ships the output results back (either all at once or independently one at a time, asynchronously, when they become ready). When the upper resource notices the results (or parts of the results) have been returned (i.e., as output to its leaf node function), it will use these values as needed by its own activities (all of which are uniquely prioritized) or send them on to other resources in its neighborhood.

USL Distributed Event

Driven Protocol

The event driven character

of a variable in a USL system may be separated into two aspects, its event

(control) aspect and value (data) aspect.

When a variable (i.e., the state of an object) receives its object as a

value, the event aspect ripples through the system of controllers each of which

in turn makes appropriate new lines of control. Having this separation is the key to a distributed event

driven communication protocol (see Figure 1). When objects are passed from one resource to another (e.g.,

y from f of A on R1 to g of B on R2) this protocol is used to coordinate the

dXecutors (here, R0, R1 and R2) involved in the transmission of an object's

contents (e.g., the contents of y).

Following is an outline of the steps involved in the distributed

protocol:

1. When y is produced, it is packed

for transport. A control message

with A:f(x)=y on R1 as the shipper is sent to y's destination via lines of

control which manage the activation of y as an event. The control message travels out of A on R1 into M on R0,

then into B on R2. Control lines

are activated as needed to manage y's event propagation.

2. When B: g(y) on R2 receives the

control message, space for y's data is allocated and its size is set.

3. A reply message is sent directly to

the shipper (i.e., A: f(x)=y) to initiate data transfer directly to B: g(y) as

the destination.

4. When all of y's data has been put

into the network, then the control line managing f(x)=y in A is released. As data arrives at B:g(y), data is

stored directly in y's pre allocated space. As data is taken out of the network, the size of data to be

received is decremented. When size

== 0, then all network data has arrived and y as an event at g is

completed. At this point, g(y)

becomes ready. If g is the highest

priority action of the ready set of actions, then R2 begins processing g.

Figure

1: USL

Distributed Event Driven Protocol

This conceptual model has been implemented in a 001 tool suite executive component, the Distributed Xecutor, DXecutor. In this implementation, each resource circle is mapped onto a DXecutor process. Each DXecutor cooperates with its neighboring DXecutors (its parent and children DXecutors, each of which may be on the same cpu or a different cpu) to implement the Functional Architecture. Each DXecutor, as part of a distributed system of DXecutors, interprets its FMap functions from the Functional Architecture. If the highest priority function being interpreted is allocated to a neighboring DXecutor, the pair of DXecutors coordinate by sending and receiving communications using a standard protocol to initiate actions, data transfer and de-instantiate actions. Communication is mapped onto TCP/IP sockets. Each variable within the functional architecture is split into two parts, a control part and a data part. The control part is used to activate local functions or to signal other DXecutors that a variable in their interface now has a value. As described in Figure 1, the DXecutor hosting the target function (really an action) ships a ready to receive data to the DXecutor hosting the function producing the data for an output variable (i.e., the same variable from which the control portion came from). In this model, the user never has to put communication send and receive statements, synchronous or asynchronous, in their specifications, since the DXecutor handles all of this generically. This is possible because of the semantics of USL. For example, with USL, a variable is fully traceable and all children are asynchronously managed by their parent function. Synchronous behavior is defined in a USL FMap using primitive operations that set the time a variable or a set of variables will become ready. In addition, a special set of primitive functions are used to determine the existence of an object state for a variable, allowing for user defined interrupting behaviors.

We hope that the comments above have clarified what is meant by asynchronous in regard to USL. Further comments are interspersed, using [HTI] and [/HTI], within your blog below.

007 - Synchronous and asynchronous

Intro

and quotes

[1] caused me to write this post because I stalled at some of the paragraphs. The authors hit a chord in my favourite mantra - a computer programmer should be fluent at both asynchronous and synchronous systems, and know the pros and cons, necessities and unnecessities of both. I guess that I in this post will try to outline what systems means in this context.

I will start with the quotes from [1]:

[1.1] - "The flexibility required of this missions could not have been accomplished in real time without an asynchronous, multiprogramming operating system where higher priority processes interrupt lower priority processes."

[1.2] - "To our surprise, changing from a synchronous OS used in unmanned missions to an asynchronous OS in manned missions supported asynchronous development of the flight software as well."

[1.3] - "Lessons learned from this effort continue today: Systems are asynchronous, distributed, and event-driven in nature, and this should be reflected inherently in the language used to define them and the tools used to build them."

[1.4] - "Async is an example of a real-time, distributed, communicating FMap structure with both asynchronous and synchronous behavior."

Disclaimer 1: This paper is not

a review of [1]. Parts of it was a catalyst to structure some of my own

thoughts, many of them are not even mine. I should say again because I have done this

over and over. The real theme of [1] I have not even mentioned: the Universal Systems Language (missing

Wikipedia page (wrong: it has arrived after my original post [wikipedia] and [12])). The

structure(?) of this post is such that I have a virtual dialogue with the

authors - based on the quotes above.

Disclaimer 2: This is not a scientific paper - it is a post on a blog.

[1.1]

- Synchronous vs. asynchronous OS

[1.1] - "The flexibility required of this missions could not have been accomplished in real time without an asynchronous, multiprogramming operating system where higher priority processes interrupt lower priority processes.

I am not certain what the authors mean with asynchronous, multiprogramming operating system as opposed to a synchronous OS.

But I will give it a try. I assume that in a synchronous OS, the processes (also called tasks) run in some sort of slot, like some milliseconds each. They have to finish their work in their slots, so that the next slot is not delayed. This is not a side effect, it's the whole idea. One process then is not able to interrupt any other - since they run in sequence. Maybe even asynchronous I/O (like polling of interrupt sources) are assigned a slot. With such a system there is no problems with shared access, so semaphores are not needed. Things seem simple.

However, there are several process scheduling algorithms for this [wikipedia]. I assume that some of these would fall into the synchronous OS category. One such scheduling policy could be rate monotonic - where "tasks with shorter periods/deadlines are given higher priorities" [wikipedia].

I feel confident that the authors did not mean that systems - such as those programmed in Ada, with synchronous rendez-vous or channel communication schemes - constitute a synchronous OS at the scheduler level.

Or I could be wrong, and a synchronous OS is the one such that runs and schedules programs of the synchronous programming language type [wikipedia]?

Synchronized OS as using synchronous communication is probably not what they mean - but asynchronous OS and asynchronous communication might be paired in [1]. However, I hope synchronization and OS are orthogonal, also in the paper.

[Def 1] - So I hope that an "asynchronous operating system" could include an Ada-style system without any visible "OS" at all. In the following I have assumed this.

[1.1] again - Required flexibility

[1.1] - "The flexibility required of this missions could not have been accomplished in real time without an asynchronous, multiprogramming operating system where higher priority processes interrupt lower priority processes.

If the authors, with asynchronous, multiprogramming operating system mean that communication mechanism between tasks mostly is based on asynchronous messaging, then I need more explanation than [1] gives me.

However, I think that this is not what they mean. They simply mean that asynchronous OS is not synchronous OS - and that messages (events) between processes drive the system. It's not the passing of a big clockwork that displays time, like the inner machinery of Big Ben in London, where all wheels are synchronous. It's more like the combined effort of any workforce with a loose degree of central control.

[HTI]

In USL, the set of agents or resources each allocated to perform its portions of the system's functional architecture are required to enforce a strict concept of centralized control (i.e., those based on the USL axioms of control). Control is not loosened because one decides to distribute the system. In a traditional OS, "loose degree of central control" is used because it is not based on the generalized concepts of control inherent in a USL system. Because of this, protectionist mechanisms in each separate/distributed process/agent must be enforced locally. This includes specialized mechanisms for communications such as those for synchronous and asynchronous communications. In a USL functional architecture, these underlying mechanisms are transparent. In contrast, the DXecutor handles all of this for a USL functional architecture.

[/HTI]

The communication mechanism between processes is rather crucial. Some mean that asynchronous communicating (send and forget) and a common message buffer solves their need. Some would say a synchronous communication mechanism solves their need (channels or Ada-like rendez-vous). The latter is often seen as rather stiff or rigid, and I dare to say, little understood. I have written a paper about this: CSP: arriving at the CHANnel island - Industrial practitioner's diary: In search of a new fairway [2].

[HTI]

All of these mechanisms are essentially irrelevant to the concepts of asynchronous interacting distributed execution of a functional architecture written in USL. Variables in USL are asynchronously managed by parents. What this means is that when a variable of a producer action gets its value, all functions (specifically, their corresponding actions) are instantiated. The only synchronization that might occur is due to some agent (or resource singularity). For example, a DXecutor on a traditional OS (e.g., Linux), could use asynchronous and synchronous sends and receives to implement its process communications. But, the DXecutor has the knowledge built into the functional architecture to know where the target consumer actions are that will receive the producer's variable's value. Note, that all communications in this sense are fully automated - meaning that no communications functions are needed in a functional architecture to define communications or coordination due to that communication.

[/HTI]

Using an asynch message scheme would cause a process to have to know how the internal programming in the other processes work. Here is a quote from [3] - which discusses an alternative to the non-Ada type communication scheme, the Java monitor synchronization mechanism:

One

crucial benefit of CSP is that its thread semantics are compositional (i.e.

WYSIWYG), whereas monitor thread semantics are context-sensitive (i.e.

non-WYSIWYG and that's why they hurt!). Example: to write and understand one

synchronized method in a (Java) class, we need to write and understand all the

synchronized methods in that class at the same time -- we can't knock them off

one-by-one! This does not scale!! We have a combinatorial explosion of

complexity!!!

CSP is not out of scope here - Ada's rendes-vous is based on CSP [5]. The WYSIWYG is also discussed in [4].

Bear in mind that communication scheme and degree of synchronization are closely related. With zero buffered synchronous channels, communication is synchronization. With buffered asynchronous-until-full channels, synchronization happens when the channel reaches capacity and becomes synchronous. When the channel size is infinite, synchronization never happens - or it does not happen before a sender at application level waits for a response, i.e. inserts synchronization.

Different programmers tend to chose different methodologies here. Or the traditions and earlier usage in different companies. Or changing times. My previous post '006' will try to discuss this.

There are also several other points, like the producer / consumer problem. Here, buffers would fill up (to overflow?) on a faster producer than consumer. Also, with messages flowing into a process, there may be no system to stop a message from arriving. With input guards and synchronous communication it's possible to close the door from a client, to finish off a session with another client - undisturbed by the fact that another message is waiting outside the door. An important building block.

[HTI]

Producers and consumers are completely specified in the functional architecture. There can never be overflow; faster producers and slower consumers are never an issue. This is because all actions are totally ordered using priorities and all data flows are discrete in regard to an instantiation. That is, a primitive action can only produce one value for each of its output object states. And, each object state activates only one primitive action.

[/HTI]

However, since the world is basically asynchronous, it is important that programmers know the different tools and methodologies. Synchronous communication may be built on top of an asynchronous layer - and opposite. I will come back to this. Deadlock (synchronous) and pathological buffer overflow (asynchronous) must be avoided. This is exciting!

[HTI]

These problems are inherently eliminated with USL.

[/HTI]

[1.2]

- Loosely coupled development teams

[1.2] - "To our surprise, changing from a synchronous OS used in unmanned missions to an asynchronous OS in manned missions supported asynchronous development of the flight software as well."

If one of the systems I have been working on is in fact an asynchronous OS, but with synchronous communication (channels) layered on top - and that is the same as the above (assuming [Def 1]), we probably have experienced the same. I have written a paper about this: High Cohesion and Low Coupling: the Office Mapping Factor [6].

I argue that the higher cohesion in each process (they each do a well defined job), and the less coupling (with synchronous communication and WYSIWYG semantics) - the easier it seemed to be for programmers to agree on the interface contract (the message sequences or protocol) - and then enter the office and do the job. There was little need to discuss any more.

My paper is a case observation from industry - so the Office Mapping Factor is only a hypothesis until somebody takes the token and carries on.

In [1.2] there is a term, asynchronous development, which needs a definition. I think they talk about decoupling of the development members and teams as much as possible from each other. My gut feeling is that this is the same as my Office Mapping Factor. However, if they base their software on asynchronously communicating processes - then, they would perhaps get even better yield by using [Def 1] - Ada-type communication.

If the Ada designers had used named rendez-vous or named channels to communicate between processes, instead of named endpoints, it would have been even easier to build good libraries. C.A.R. Hoare, in his second CSP book of 1985 (ref. in [5]) took the channels to be named entities that could be ripped up and placed anywhere - in run-time. A process communicates on a named channel (like on an ip-address), not with another named process. This was about the same time that that Hoare (with fellows at Oxford University) together with people at Inmos (David May etc.) had built a runnable instance of CSP, the programming language occam [7] and a processor for it, called a transputer. It would be wrong of me not to admit that it is from those sources and fellow admirers I have been drinking, from about 1980 to this very day.

This would make the programmer's interfaces (API) better, and well defined message sequences with WYSIWYG semantics - tools for getting "asynchronous development" with high Office Mapping Factor.

[1.3]

- Systems are increasingly asynchronous

[1.3] - "Lessons learned from this effort continue today: Systems are asynchronous, distributed, and event-driven in nature, and this should be reflected inherently in the language used to define them and the tools used to build them."

Now I really begin to doubt that [Def 1] is anything but a misinterpretation in the context of [1]. Because, yes, there is a trend. I saw a web page of a high profile lecturer who should have a one day course. One of the points was Running tasks in isolation and communicate via async messages. Then I queried on a discussion group, and one in the community said that:

"Most likely it's the fire and forget and hope the buffer doesn't overflow variety - Erlang style concurrency. This seems to be the current method everyone is interested in (Erlang, Scala actors, F# Async Mailboxes, Microsoft Concurrency and Coordination Runtime). There is sometimes some form of guard available, be it one that allows checking of the incoming message, or selection of one from multiple inputs."

Maybe this is what the authors refer to. Or something along that line.

[HTI]

No. See comments above on the DXecutor which supports USL runtime semantics.

[/HTI]

When they talk about language, I am sure they mean the language described in [1], USL. With solid semantics, and if the semantics is well enough defined, it does not matter if it's based on synchronous or asynchronous communication? Like, Harel State Charts in UML are based on asynchronous communication between state machines. And it's pretty solid, and it doesn't really void formal verification, it seems. Of course it is possible to build good systems with this, both unmanned and manned missions of any literal kind.

[HTI]

See SysML paper. It does matter; UML is not an asynchronous event driven language. It is a traditional sequential/synchronous language with asynchronous and synchronization operators for communications.

[/HTI]

But maybe, if the same designers knew as well about the [Def 1] style systems as they do with the asynch OS / asynch communication schemes, we would get even better systems? To introduce a world where it's understood that you cannot simulate or visualize a system to be error free. Some tools now do verification: IAR's Visual State (of UML state machines), Spin (of Promela models) and FDR2 (of CSP), as well as LTSA (of FSP) analysis, see [8]- [11].

[1.4] - Joining heads for synch and asynch?

[1.4] - "Async is an example of a real-time, distributed, communicating FMap structure with both asynchronous and synchronous behavior."

Now, what does this mean? If it's asynchronous OS and synchronous OS behavior, it would sound strange to me. According to Turing, any machine can run on any other. Laid out here: asynch or synch OS may be built on each other. So, the behaviour should really be the same? If MS Word runs on Mac OS X or Windows the behaviour is the same? (well, almost..)

Instead, I have a hypothesis that they this time talk about asynchronous and synchronous communication behaviour? Then it makes more sense to me to talk about different behaviour.

Any software engineer should know these methodologies (tools), know the strengths and weaknesses of each. When it's appropriate to use one instead of the other, and how combinations could be used.

I will not sum up here. I have glimpsed through some points, seriously tearing and wearing on the [1] paper, and used it as a catalyst. I have written about these things for years. Being incomplete as it is, there may be some points in some of my papers, at http://www.teigfam.net/oyvind/pub/pub.html. Maybe I will try to sum up in a future posting.

References

[1] - Universal Systems Language: Lessons Learned from Apollo. Margaret H. Hamilton and William R. Hackler, Hamilton Technologies, Inc. In IEEE Computer, Dec. 2008 pp. 34-43 ("USL")

[2] - CSP: arriving at the CHANnel island - Industrial practitioner's diary: In search of a new fairway. Øyvind Teig, Navia Maritime AS, division Autronica. In "Communicating Process Architectures", P.H. Welch and A.W.P. Bakkers (Eds.), IOS Press, NL, 2000, Pages 251-262, ISBN 1 58603 077 9. CPA 2000 conference (In the series: "WoTUG-23"), Communicating Process Architectures, University of Kent at Canterbury, UK, 10-13. Sept. 2000. Read at my home page at http://www.teigfam.net/oyvind/pub/pub_details.html#CSP:arriving_at_the_CHANnel_island

[3] - Letter to Edward A. Parrish, The Editor, IEEE Computer. Peter Welch (University of Kent, UK) et al. http://www.cs.bris.ac.uk/~alan/Java/ieeelet.html (1997)

[4] - A CSP Model for Java Threads (and Vice-Versa). Peter Welch. Jeremy M. R. Martin. Logic and Semantics Seminar (CU Computer Laboratory) (2000) - http://www.cs.kent.ac.uk/projects/ofa/jcsp/csp-java-model-6up.pdf

[5]- CSP - Communicating sequential processes - http://en.wikipedia.org/wiki/Communicating_sequential_processes

[6] - High Cohesion and Low Coupling: the

Office Mapping Factor. Øyvind Teig, Autronica

Fire and Security (A UTC Fire and Security company). In Communicating Process

Architectures 2007

Alistair McEwan, Steve Schneider, Wilson Ifill and Peter Welch (Eds.). IOS Press, 2007 (pages 313-322). ISBN 978-1-58603-767-3. © 2007 The authors and IOS Press. Read at http://www.teigfam.net/oyvind/pub/pub_details.html#TheOfficeMappingFactor

[7] - The occam programming language - http://en.wikipedia.org/wiki/Occam_(programming_language)

[8] - IAR's Visual State (of UML state machines) - http://en.wikipedia.org/wiki/IAR_Systems

[9] - Spin (of Promela models) - http://en.wikipedia.org/wiki/Promela

[10] - FDR2 (of CSP models) - http://www.fsel.com/

[11] - LTSA (of FSP models) - http://www-dse.doc.ic.ac.uk/concurrency/

[12] - Universal Systems Language - http://en.wikipedia.org/wiki/Universal_Systems_Language